¶ Setting up a Ceph cluster

¶ Base

VMware template: Debian 12.4

The template lets the hosts connect to each other over SSH as root.

IP addressing via DHCP.

Addresses are defined in DNS.

¶ Infrastructure

Domain: dell.stef.lan

| Hostname | MAC | IP | Additional disk |
|---|---|---|---|
| ceph-node01 | 00:50:56:2B:F5:5C | 192.168.70.41 | /dev/sdb |
| ceph-node02 | 00:50:56:29:0A:F3 | 192.168.70.42 | /dev/sdb |
| ceph-node03 | 00:0C:29:F9:E3:23 | 192.168.70.43 | /dev/sdb |
| ceph-s3 | NA | NA | NA |

¶ Preparation

Set the hostname, for example on ceph-node01:

    hostnamectl set-hostname ceph-node01

Remember to update /etc/hosts on every host as well.
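A small sketch for generating those /etc/hosts entries, assuming the names and addresses from the infrastructure table and the dell.stef.lan domain. The function name `ceph_hosts_entries` is hypothetical. It only prints the lines so you can review them first; appending twice would duplicate the entries.

```shell
# Hypothetical helper: print /etc/hosts lines ("IP FQDN shortname") for the Ceph nodes.
# The domain defaults to the one from the infrastructure section.
CEPH_DOMAIN=${CEPH_DOMAIN:-dell.stef.lan}

ceph_hosts_entries() {
    # Each argument has the form hostname=ip
    for pair in "$@"; do
        name=${pair%%=*}
        ip=${pair#*=}
        printf '%s %s.%s %s\n' "$ip" "$name" "$CEPH_DOMAIN" "$name"
    done
}
```

Usage, as root on each host: `ceph_hosts_entries ceph-node01=192.168.70.41 ceph-node02=192.168.70.42 ceph-node03=192.168.70.43 >> /etc/hosts`.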

¶ Prerequisites on all hosts

    apt install -y podman python3 lvm2
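To confirm the prerequisites are in place on a host, a minimal check could look like this. `missing_prereqs` is a hypothetical helper; it assumes the binaries are named `podman`, `python3` and `lvm` (the latter comes from the lvm2 package and usually lives under /usr/sbin, so run the check as root).

```shell
# Hypothetical helper: report which of the given commands are not found in PATH.
# Prints "missing: <names>" and returns 1 if any is absent, returns 0 otherwise.
missing_prereqs() {
    missing=""
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || missing="$missing $cmd"
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing"
        return 1
    fi
    return 0
}
```

Usage on each host: `missing_prereqs podman python3 lvm`.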

¶ Installing cephadm

On ceph-node01:

    apt install -y curl
    curl --silent --remote-name --location https://github.com/ceph/ceph/raw/pacific/src/cephadm/cephadm
    chmod +x cephadm
    sudo mv cephadm /usr/local/bin/
    cephadm bootstrap --mon-ip 192.168.70.41 --allow-fqdn-hostname

Verifying IP 192.168.70.41 port 3300 ...

Verifying IP 192.168.70.41 port 6789 ...

Mon IP `192.168.70.41` is in CIDR network `192.168.70.0/24`

Mon IP `192.168.70.41` is in CIDR network `192.168.70.0/24`

Internal network (--cluster-network) has not been provided, OSD replication will default to the public_network

Pulling container image quay.io/ceph/ceph:v16...

... snip ...

Fetching dashboard port number...

Ceph Dashboard is now available at:

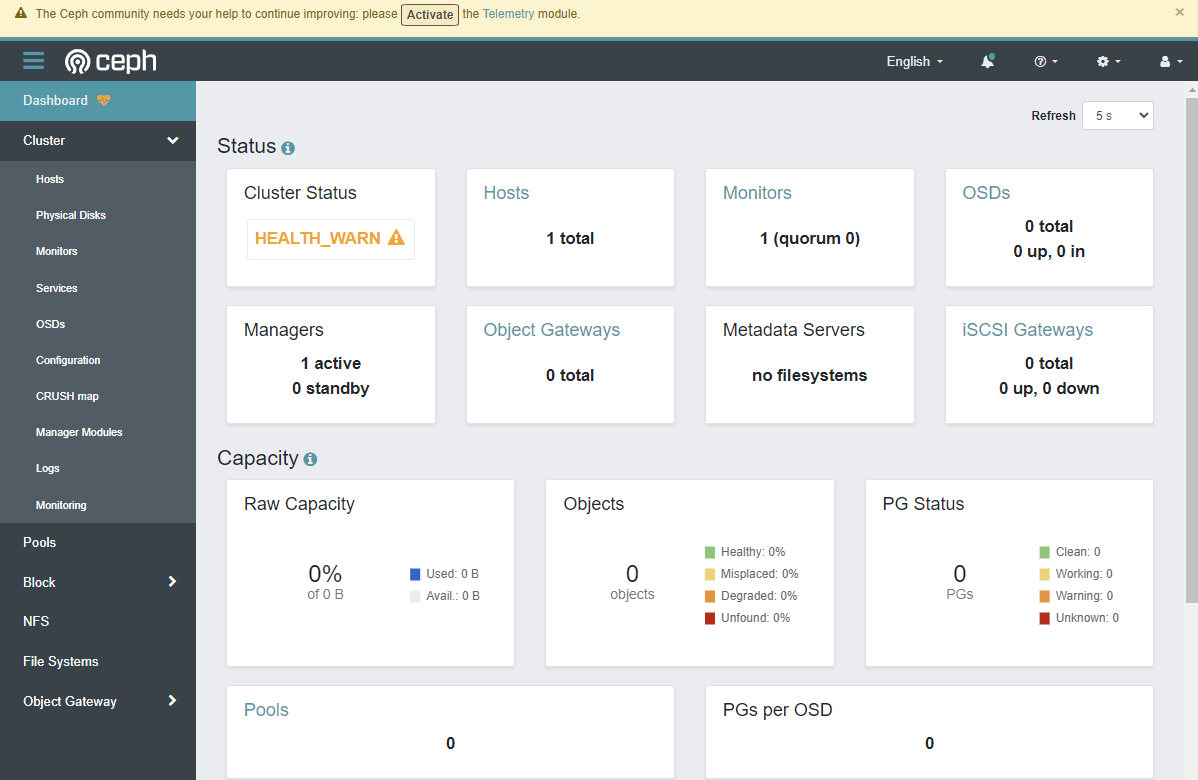

URL: https://ceph-node01.dell.stef.lan:8443/

User: admin

Password: 9014bm17fd

Enabling client.admin keyring and conf on hosts with "admin" label

Enabling autotune for osd_memory_target

You can access the Ceph CLI as following in case of multi-cluster or non-default config:

sudo /usr/local/bin/cephadm shell --fsid 924a0466-9f38-11ee-96ea-0050562bf55c -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Or, if you are only running a single cluster on this host:

sudo /usr/local/bin/cephadm shell

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/en/pacific/mgr/telemetry/

Bootstrap complete.

Test shell access:

    root@ceph-node01:~# sudo /usr/local/bin/cephadm shell
    Inferring fsid 924a0466-9f38-11ee-96ea-0050562bf55c
    Using recent ceph image quay.io/ceph/ceph@sha256:7278e7da3b60c0ed54a1960371c854632caab27f0d6c90d6812f2a36b22b8f9a
    root@ceph-node01:/# ceph -s
      cluster:
        id:     924a0466-9f38-11ee-96ea-0050562bf55c
        health: HEALTH_WARN
                OSD count 0 < osd_pool_default_size 3

      services:
        mon: 1 daemons, quorum ceph-node01 (age 12m)
        mgr: ceph-node01.xjlisb(active, since 8m)
        osd: 0 osds: 0 up, 0 in

      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   0 B used, 0 B / 0 B avail
        pgs:

    root@ceph-node01:/# ceph -v
    ceph version 16.2.14 (238ba602515df21ea7ffc75c88db29f9e5ef12c9) pacific (stable)

Dashboard access in this example: https://ceph-node01.dell.stef.lan:8443/

¶ Deploying the Ceph SSH key to all hosts

    ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph-node02
    ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph-node03

¶ Adding OSDs

    sudo /usr/local/bin/cephadm shell

Add the two other nodes:

    root@ceph-node01:/# ceph orch host add ceph-node02
    Added host 'ceph-node02' with addr '192.168.70.42'
    root@ceph-node01:/# ceph orch host add ceph-node03
    Added host 'ceph-node03' with addr '192.168.70.43'

Tag all 3 hosts with the osd label:

    root@ceph-node01:/# ceph orch host label add ceph-node01 osd
    Added label osd to host ceph-node01
    root@ceph-node01:/# ceph orch host label add ceph-node02 osd
    Added label osd to host ceph-node02
    root@ceph-node01:/# ceph orch host label add ceph-node03 osd
    Added label osd to host ceph-node03

List the available storage devices:

    root@ceph-node01:/# ceph orch device ls
    HOST         PATH      TYPE  DEVICE ID  SIZE   AVAILABLE  REFRESHED  REJECT REASONS
    ceph-node01  /dev/sdb  hdd              20.0G  Yes        13m ago
    ceph-node02  /dev/sdb  hdd              20.0G  Yes        112s ago
    ceph-node03  /dev/sdb  hdd              20.0G  Yes        12s ago

If not all nodes appear yet, wait a little and run the command again. Example:

    root@ceph-node01:/# ceph orch device ls
    HOST         PATH      TYPE  DEVICE ID  SIZE   AVAILABLE  REFRESHED  REJECT REASONS
    ceph-node01  /dev/sdb  hdd              20.0G  Yes        21m ago
    ceph-node02  /dev/sdb  hdd              20.0G  Yes        104s ago
    root@ceph-node01:/# ceph orch device ls
    HOST         PATH      TYPE  DEVICE ID  SIZE   AVAILABLE  REFRESHED  REJECT REASONS
    ceph-node01  /dev/sdb  hdd              20.0G  Yes        21m ago
    ceph-node02  /dev/sdb  hdd              20.0G  Yes        117s ago
    ceph-node03  /dev/sdb  hdd              20.0G  Yes        4s ago
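Instead of re-running the listing by hand, the wait can be scripted. This is only a sketch: `wait_for_device_hosts` is a hypothetical helper that assumes the column layout shown above (a header line, then the hostname in the first column) and takes the listing command as its trailing arguments.

```shell
# Hypothetical helper: poll an inventory command until devices from at least
# EXPECTED distinct hosts are listed, trying at most MAX_TRIES times.
# Arguments: EXPECTED MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_for_device_hosts() {
    expected=$1
    max_tries=$2
    delay=$3
    shift 3
    try=1
    while :; do
        # Count distinct hostnames in column 1, skipping the header and blank lines
        hosts=$("$@" | awk 'NR > 1 && NF > 0 && !seen[$1]++ { n++ } END { print n + 0 }')
        if [ "$hosts" -ge "$expected" ]; then
            echo "$hosts hosts reporting devices"
            return 0
        fi
        if [ "$try" -ge "$max_tries" ]; then
            echo "only $hosts of $expected hosts reporting devices" >&2
            return 1
        fi
        try=$((try + 1))
        sleep "$delay"
    done
}
```

Usage inside `cephadm shell`, up to 30 tries 10 seconds apart: `wait_for_device_hosts 3 30 10 ceph orch device ls`.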

Add the storage:

    root@ceph-node01:/# ceph orch daemon add osd ceph-node01:/dev/sdb
    Created osd(s) 0 on host 'ceph-node01'
    root@ceph-node01:/# ceph orch daemon add osd ceph-node02:/dev/sdb
    Created osd(s) 1 on host 'ceph-node02'
    root@ceph-node01:/# ceph orch daemon add osd ceph-node03:/dev/sdb
    Created osd(s) 2 on host 'ceph-node03'
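The host, label and OSD steps above repeat the same commands for each node. A minimal sketch that loops over them, assuming /dev/sdb on every host as in the infrastructure table. `run`, `add_osd_hosts` and `BOOTSTRAP_HOST` are hypothetical names, and setting `DRY_RUN=1` only prints the commands. On freshly added hosts the disk may not be listed yet, so you may still need to wait for `ceph orch device ls` as described above before the OSD step succeeds.

```shell
# Hypothetical dry-run wrapper: with DRY_RUN set, print the command instead of running it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# The bootstrap host is already in the cluster, so "host add" is skipped for it.
BOOTSTRAP_HOST=${BOOTSTRAP_HOST:-ceph-node01}

# Hypothetical helper: add each host, label it "osd" and create an OSD on DEVICE.
# Arguments: DEVICE HOST [HOST...]
add_osd_hosts() {
    device=$1
    shift
    for host in "$@"; do
        if [ "$host" != "$BOOTSTRAP_HOST" ]; then
            run ceph orch host add "$host"
        fi
        run ceph orch host label add "$host" osd
        run ceph orch daemon add osd "$host:$device"
    done
}
```

Preview first with `DRY_RUN=1 add_osd_hosts /dev/sdb ceph-node01 ceph-node02 ceph-node03`, then run it again without `DRY_RUN` inside `cephadm shell`.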

Check the cluster status:

    root@ceph-node01:/# ceph -s
      cluster:
        id:     924a0466-9f38-11ee-96ea-0050562bf55c
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum ceph-node01,ceph-node02,ceph-node03 (age 119s)
        mgr: ceph-node01.xjlisb(active, since 60m), standbys: ceph-node02.bejrod
        osd: 3 osds: 3 up (since 2s), 3 in (since 19s)

      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   581 MiB used, 39 GiB / 40 GiB avail
        pgs:

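¶ Creating a CephFS

    root@ceph-node01:/# ceph osd pool create cephfs_data replicated
    pool 'cephfs_data' created
    root@ceph-node01:/# ceph osd pool create cephfs_metadata
    pool 'cephfs_metadata' created
    root@ceph-node01:/# ceph osd pool set cephfs_data size 2
    set pool 3 size to 2
    root@ceph-node01:/# ceph osd pool set cephfs_metadata size 2
    set pool 4 size to 2
    root@ceph-node01:/# ceph osd pool set cephfs_data bulk true
    root@ceph-node01:/# ceph orch apply mds cephfs01 --placement="2 ceph-node02 ceph-node03"
    root@ceph-node01:/# ceph fs new cephfs01 cephfs_data cephfs_data
    new fs with metadata pool 3 and data pool 3

The syntax is `ceph fs new <fs_name> <metadata_pool> <data_pool>`. The run captured above passed cephfs_data for both pools, as the output ("metadata pool 3 and data pool 3") and the later `ceph fs ls` output show. To use the dedicated metadata pool, run `ceph fs new cephfs01 cephfs_metadata cephfs_data` instead.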

    root@ceph-node01:/# ceph -s
      cluster:
        id:     5f3802a4-9f14-11ee-80ae-00505633a142
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum ceph-node01,ceph-node02,ceph-node03 (age 18m)
        mgr: ceph-node01.pwyeje(active, since 34m), standbys: ceph-node02.lyfhkb
        mds: 1/1 daemons up, 1 standby
        osd: 3 osds: 3 up (since 14m), 3 in (since 15m)

      data:
        volumes: 1/1 healthy
        pools:   3 pools, 161 pgs
        objects: 22 objects, 2.3 KiB
        usage:   871 MiB used, 59 GiB / 60 GiB avail
        pgs:     161 active+clean
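The CephFS creation steps can be wrapped so that `ceph fs new` always receives the metadata pool first and the data pool second. A sketch under stated assumptions: `run` and `create_cephfs` are hypothetical names, `DRY_RUN=1` prints the commands instead of executing them, and the replica size and MDS placement are passed in rather than hard-coded.

```shell
# Hypothetical dry-run wrapper: with DRY_RUN set, print the command instead of running it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper reproducing the steps of this section.
# Arguments: FS_NAME METADATA_POOL DATA_POOL REPLICA_SIZE MDS_PLACEMENT
create_cephfs() {
    fs=$1; meta=$2; data=$3; size=$4; mds_placement=$5
    run ceph osd pool create "$data" replicated
    run ceph osd pool create "$meta"
    run ceph osd pool set "$data" size "$size"
    run ceph osd pool set "$meta" size "$size"
    run ceph osd pool set "$data" bulk true
    run ceph orch apply mds "$fs" --placement="$mds_placement"
    # Argument order: file system name, METADATA pool, then DATA pool
    run ceph fs new "$fs" "$meta" "$data"
}
```

Preview with `DRY_RUN=1 create_cephfs cephfs01 cephfs_metadata cephfs_data 2 "2 ceph-node02 ceph-node03"` before running it for real.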

¶ Mounting the CephFS on a client

¶ Prerequisites

    root@swarm-node01:~# apt install -y ceph-common

Create the file /etc/ceph/ceph.conf on the client, with the content shown by `cat /etc/ceph/ceph.conf` on ceph-node01:

    [global]
    fsid = 924a0466-9f38-11ee-96ea-0050562bf55c
    mon_host = [v2:192.168.70.41:3300/0,v1:192.168.70.41:6789/0] [v2:192.168.70.42:3300/0,v1:192.168.70.42:6789/0] [v2:192.168.70.43:3300/0,v1:192.168.70.43:6789/0]
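If you prefer to write that file from a script, here is a minimal sketch; `write_minimal_conf` is a hypothetical helper that writes only the two keys shown above. On ceph-node01, `ceph config generate-minimal-conf` prints an equivalent minimal configuration that can be copied instead.

```shell
# Hypothetical helper: write a minimal client ceph.conf containing fsid and mon_host.
# Arguments: FSID MON_HOST OUTPUT_FILE
write_minimal_conf() {
    fsid=$1; mon_host=$2; out=$3
    # Write to a temporary file first, then move it into place
    tmp="$out.tmp.$$"
    {
        printf '[global]\n'
        printf '\tfsid = %s\n' "$fsid"
        printf '\tmon_host = %s\n' "$mon_host"
    } > "$tmp" && chmod 644 "$tmp" && mv "$tmp" "$out"
}
```

Usage on the client, as root: `write_minimal_conf 924a0466-9f38-11ee-96ea-0050562bf55c "<mon_host value from ceph-node01>" /etc/ceph/ceph.conf`.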

¶ Generating the access keyring

On ceph-node01:

    root@ceph-node01:/# ceph fs ls
    name: cephfs01, metadata pool: cephfs_data, data pools: [cephfs_data ]
    root@ceph-node01:/# ceph auth ls
    mds.cephfs01.ceph-node02.xlwatj
            key: AQBhsoJlsLkbIxAAWTw2DH7VMRC+guI4BRgQSw==
            caps: [mds] allow
            caps: [mon] profile mds
            caps: [osd] allow rw tag cephfs *=*
    ...
    mgr.ceph-node02.lyfhkb
            key: AQD6rYJlBxeUGBAAKf7+Uctj4yFDFO7B6cQKUQ==
            caps: [mds] allow *
            caps: [mon] profile mgr
            caps: [osd] allow *
    installed auth entries:

Create the keyring for CephFS access:

    root@ceph-node01:/# ceph fs authorize cephfs01 client.all / rw
    [client.all]
            key = AQCOF4NlTQREGhAAkTsDf1BdDQeJ56YETl7gwQ==
    root@ceph-node01:/# ceph auth get client.all
    [client.all]
            key = AQCOF4NlTQREGhAAkTsDf1BdDQeJ56YETl7gwQ==
            caps mds = "allow rw fsname=cephfs01"
            caps mon = "allow r fsname=cephfs01"
            caps osd = "allow rw tag cephfs data=cephfs01"
    exported keyring for client.all

On the target client (swarm-node01), create the file /etc/ceph/ceph.client.all.keyring containing the keyring printed above (the [client.all] section with its key and caps).
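A minimal sketch for storing the keyring with restrictive permissions, assuming you paste the `[client.all]` block on standard input. `install_keyring` is a hypothetical helper; the target directory defaults to /etc/ceph.

```shell
# Hypothetical helper: save a keyring read from stdin as
# <dir>/ceph.client.<name>.keyring, readable by its owner only.
# Arguments: NAME [DIR]
install_keyring() {
    name=$1
    dir=${2:-/etc/ceph}
    dest="$dir/ceph.client.$name.keyring"
    # umask 077 (only inside the subshell) creates a new file with mode 600
    (umask 077 && cat > "$dest") || return 1
    # umask does not change an existing file, so enforce the mode explicitly
    chmod 600 "$dest" || return 1
    # Sanity check: the pasted text must contain the [client.<name>] header
    grep -qxF "[client.$name]" "$dest"
}
```

Usage as root on the client: run `install_keyring all`, paste the keyring, then press Ctrl-D.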

Add the mount to /etc/fstab as follows (the monitors are ceph-node01 to ceph-node03, i.e. 192.168.70.41 to .43):

    192.168.70.41,192.168.70.42,192.168.70.43:6789:/ /mnt/ceph ceph _netdev,name=all,fs=cephfs01 0 0
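The same fstab format is reused for the restricted client further down, so the entry can be built from its parts. A sketch: `cephfs_fstab_line` is a hypothetical helper, and the monitor list is passed verbatim (here with the port appended to the last address, as in the line above).

```shell
# Hypothetical helper: print a CephFS kernel-client fstab entry.
# Arguments: MON_LIST CEPHX_USER FS_NAME CEPHFS_PATH MOUNT_POINT
cephfs_fstab_line() {
    mons=$1; user=$2; fs=$3; path=$4; mnt=$5
    printf '%s:%s %s ceph _netdev,name=%s,fs=%s 0 0\n' "$mons" "$path" "$mnt" "$user" "$fs"
}
```

For example, `cephfs_fstab_line 192.168.70.41,192.168.70.42,192.168.70.43:6789 all cephfs01 / /mnt/ceph` prints the entry for client.all.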

Mount it, then create the swarm directory:

    sudo mkdir -p /mnt/ceph
    sudo systemctl daemon-reload
    sudo mount -a
    sudo mkdir /mnt/ceph/swarm
    sudo chown stef:stef /mnt/ceph/swarm

¶ Restricting access

Unmount:

    stef@swarm-node01:/etc/ceph$ sudo umount /mnt/ceph

Create access limited to the /swarm subdirectory:

    root@ceph-node01:/# ceph fs authorize cephfs01 client.swarm /swarm rw
    [client.swarm]
            key = AQBDFoNlh/DnGBAAcNX84l8fur/T/kml/RZcSw==

Export the keyring:

    root@ceph-node01:/# ceph auth get client.swarm
    [client.swarm]
            key = AQBDFoNlh/DnGBAAcNX84l8fur/T/kml/RZcSw==
            caps mds = "allow rw fsname=cephfs01 path=/swarm"
            caps mon = "allow r fsname=cephfs01"
            caps osd = "allow rw tag cephfs data=cephfs01"

On the clients (swarm-node01 and swarm-node02 in this example), copy this content into /etc/ceph/ceph.client.swarm.keyring.

Edit /etc/fstab and add the line below. It uses the same /mnt/ceph mount point, so on swarm-node01 remove the earlier client.all line:

    192.168.70.41,192.168.70.42,192.168.70.43:6789:/swarm /mnt/ceph ceph _netdev,name=swarm,fs=cephfs01 0 0

Then reload and mount:

    sudo systemctl daemon-reload
    sudo mount -a

Repeat the same steps on swarm-node02 and swarm-node03.

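¶ Allowing pool deletion

    ceph tell mon.* injectargs --mon_allow_pool_delete true

`injectargs` only changes the running monitors; to make the setting persistent, use `ceph config set mon mon_allow_pool_delete true`.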

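¶ Certificate management

`cephadm shell -m` mounts the local ./certificats/ directory into the container; when no destination is given, it is mounted under /mnt:

    sudo /usr/local/bin/cephadm shell -m ./certificats/
    root@ceph-node01:/# ls /mnt/
    ceph+dashboard+dell.crt  ceph+dashboard+dell.key
    root@ceph-node01:/# ceph dashboard set-ssl-certificate -i /mnt/ceph+dashboard+dell.crt
    SSL certificate updated
    root@ceph-node01:/# ceph dashboard set-ssl-certificate-key -i /mnt/ceph+dashboard+dell.key
    SSL certificate key updated

The dashboard only picks up the new certificate after it restarts, for example by disabling and re-enabling the module with `ceph mgr module disable dashboard` followed by `ceph mgr module enable dashboard`.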
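Before loading the files, it can help to check that the certificate and the key actually belong together. A sketch using the openssl command-line tool; `cert_matches_key` is a hypothetical helper that compares the public key embedded in the certificate with the public key derived from the private key (this works for RSA and EC keys alike).

```shell
# Hypothetical helper: succeed only if CERT_FILE and KEY_FILE share the same public key.
# Arguments: CERT_FILE KEY_FILE
cert_matches_key() {
    crt_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 1
    key_pub=$(openssl pkey -in "$2" -pubout) || return 1
    [ "$crt_pub" = "$key_pub" ]
}
```

Usage on the host, before starting `cephadm shell`: `cert_matches_key certificats/ceph+dashboard+dell.crt certificats/ceph+dashboard+dell.key && echo match`.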

¶ Enabling RadosGW

See https://docs.ceph.com/en/latest/cephadm/services/rgw/

Label the gateway hosts, then deploy the service on the hosts carrying that label:

    sudo /usr/local/bin/cephadm shell
    root@ceph-node01:/# ceph orch host label add ceph-node02 rgw
    root@ceph-node01:/# ceph orch host label add ceph-node03 rgw
    root@ceph-node01:/# ceph orch apply rgw foo --placement=label:rgw

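Through the dashboard, create a user, then create a bucket for that user.

On swarm-node01, install s3fs (`apt install -y s3fs`) and create the password file /etc/ceph/.passwd-ceph-stef containing:

    access_key:secret_key

Restrict it with `chmod 600 /etc/ceph/.passwd-ceph-stef`, since s3fs refuses a password file that other users can read.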
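A sketch that writes the file and sets its mode in one step; `write_s3fs_passwd` is a hypothetical helper, and the keys are placeholders for the values of your RGW user.

```shell
# Hypothetical helper: write an s3fs credentials file (ACCESS_KEY:SECRET_KEY) with mode 600.
# Arguments: ACCESS_KEY SECRET_KEY DEST_FILE
write_s3fs_passwd() {
    access_key=$1; secret_key=$2; dest=$3
    # umask 077 (only inside the subshell) so a new file is never group/other readable
    (umask 077 && printf '%s:%s\n' "$access_key" "$secret_key" > "$dest") || return 1
    # Also tighten an already existing file
    chmod 600 "$dest"
}
```

Usage as root: `write_s3fs_passwd "<access_key>" "<secret_key>" /etc/ceph/.passwd-ceph-stef`.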

You can retrieve these credentials either from the dashboard or with cephadm:

    root@ceph-node01:~# sudo /usr/local/bin/cephadm shell
    root@ceph-node01:/# radosgw-admin user info --uid=stef
    {
        "user_id": "stef",
        "display_name": "stef",
        ...
        "keys": [
            {
                "user": "stef",
                "access_key": "S6Jxxxxxx1XCP",
                "secret_key": "KARxxxxxxxxxxxxxxxxxxxxx3IX47C"
            }
        ],
        ...
    }
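To avoid copying the keys by hand, the JSON can be turned into the `access_key:secret_key` line directly. A sketch: `s3fs_creds_from_user_info` is a hypothetical helper that uses only the Python standard library (assuming python3 is available where you run it) and takes the first entry of `keys`.

```shell
# Hypothetical helper: read `radosgw-admin user info` JSON on stdin and print
# "access_key:secret_key" for the first key pair, the format s3fs expects.
s3fs_creds_from_user_info() {
    python3 -c '
import json, sys
info = json.load(sys.stdin)
key = info["keys"][0]
print(key["access_key"] + ":" + key["secret_key"])
'
}
```

Usage inside `cephadm shell`: `radosgw-admin user info --uid=stef | s3fs_creds_from_user_info`, then copy the printed line into the password file on the client.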

Add to /etc/fstab, where stefbuck is the bucket name:

    stefbuck:/ /mnt/ceph-bucket fuse.s3fs _netdev,allow_other,passwd_file=/etc/ceph/.passwd-ceph-stef,url=http://ceph-node02.dell.stef.lan,use_path_request_style 0 0